International Symposium: AI Governance and Security

- Date: Fri, Dec 06, 2019

- Time: 14:00-16:00

- Location: Daiwa House Ishibashi Nobuo Memorial Hall, Daiwa Ubiquitous Computing Research Building, Hongo Campus, The University of Tokyo

- Hosted by:

Institute for Future Initiatives, The University of Tokyo

- Co-hosted by:

Science, Technology, and Innovation Governance (STIG), UTokyo

Next Generation Artificial Intelligence Research Center, UTokyo

- Language:

English and Japanese, with simultaneous interpretation

- Capacity:

100

Artificial intelligence (AI) and related technology networks are now being used as decision support in critical situations such as medical care, finance, and war. What are the responsibilities and roles of humans and machines, and what should they be?

As technology expands globally, discussions on AI governance have begun at the international level, for example at the OECD. In Japan, the Cabinet Office has announced the “Social Principles of Human-Centric AI,” but how can the Japanese government, industry and universities contribute to these international discussions?

For this event, we invite Professor Toni Erskine (Australian National University and Cambridge University), who has been researching moral agency and responsibility in world politics, and Dr. Taylor Reynolds (MIT), who is also an expert member of the AIGO (AI Expert Group) at the Organization for Economic Co-operation and Development (OECD), to discuss the issues and prospects of AI governance and security with University of Tokyo professors.

- 14:00 Opening remarks

Prof. Hideaki Shiroyama, Institute for Future Initiatives and GraSPP, UTokyo

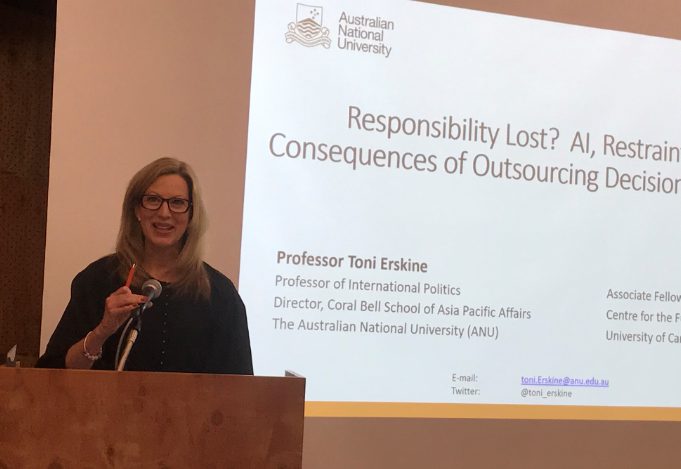

- 14:05 Presentation 1: Professor Toni Erskine, Australian National University

“Responsibility Lost? AI, Restraint, and the Consequences of Outsourcing Decision-Making in War”

- 14:35 Presentation 2: Dr. Taylor Reynolds, Technology Policy Director of the MIT Internet Policy Research Initiative (IPRI)

“AI Security and Governance – Challenges for countries at all stages of development”

- 15:05 Panel discussion and Q&A

Panelists: Prof. Toni Erskine, Dr. Taylor Reynolds, Prof. Yasuo Kuniyoshi, Prof. Hideaki Shiroyama

Moderator: Arisa Ema, Assistant Professor, Institute for Future Initiatives, UTokyo

- 15:50 Closing remarks

Professor Yasuo Kuniyoshi, Next Generation Artificial Intelligence Research Center and Graduate School of Information Science and Technology, UTokyo

Professor Toni Erskine

The prospect of weapons that can make decisions about whom to target and kill without human intervention – so-called ‘lethal autonomous weapons’, or, more colloquially, ‘killer robots’ – has engendered a huge amount of attention amongst both policy-makers and scholars. A frequently-articulated fear is that advanced AI systems of the future will have enormous potential for catastrophic harm and failure, and this will be beyond the power of states, or humanity generally, to control or correct. Proposed solutions include a moratorium on the development of fully autonomous weapons and pre-emptive international bans on their use. Debates over what should be done about this possible future threat are contentious, wide-ranging, and undoubtedly important. Yet, unfortunately, this attention to ‘killer robots’ has eclipsed a more immediate risk that accompanies the current use of AI in organised violence – a risk that has profound ethical, political, and even geo-political implications.

The concern that has motivated this talk is that existing AI-driven military tools – specifically, automated weapons that retain ‘humans in the loop’ and decision-support systems that assist targeting – risk eroding hard-won and internationally-endorsed principles of forbearance in the use of force because they change how we deliberate, how we act, and how we view ourselves as responsible agents. We need to ask how relying on AI in war affects how we – individually and collectively – understand and discharge responsibilities of restraint. This problem is not being discussed in academic, military, public policy, or media circles – and it should be.

Dr. Taylor Reynolds

The global economic system is one of sharp contrasts. We face significant economic risks such as rising poverty rates, trade frictions, and slowing global investment that threaten the living standards of billions of people. At the same time, technological leaps in fields such as machine learning (AI) and robotics deliver new and improved services, higher efficiency and lower costs to consumers. But these technologies also usher in disruptive economic and social change, as well as new security and privacy concerns. Amid these counter forces, policy makers ultimately want to deliver better economic growth and social benefit for more people.

That leaves policy makers questioning how we, as a society, can harness these benefits of computing technologies in a way that supports economic growth for all and improves social well-being. Are policy makers in both developed and developing countries sufficiently prepared to address new regulatory issues in an agile way? How can technologists be convinced to consider longer-term consequences as they build these new systems? How can governments, technologists and academics work together to achieve these goals?

Prof. Toni Erskine

Toni Erskine is Professor of International Politics and Director of the Coral Bell School of Asia Pacific Affairs at the Australian National University (ANU). She is also Editor of International Theory: A Journal of International Politics, Law, and Philosophy, Associate Fellow of the Leverhulme Centre for the Future of Intelligence at the University of Cambridge, and a Chief Investigator on the ‘Humanising Machine Intelligence’ Grand Challenge research project at ANU. She currently serves on the advisory group for the Google/United Nations Economic and Social Commission for Asia and the Pacific (ESCAP) ‘AI for the Social Good’ Research Network, administered by the Association of Pacific Rim Universities. Her research interests include the impact of artificial intelligence on responsibilities of restraint in war; the moral agency of formal organisations (such as states, transnational corporations, and intergovernmental organisations) in international politics; informal associations and imperatives for joint action in the context of global crises; cosmopolitan theories and their critics; the ethics of war; and the responsibility to protect populations from mass atrocity crimes (R2P). She received her PhD from the University of Cambridge, where she was Cambridge Commonwealth Trust Fellow, and then British Academy Postdoctoral Fellow. Before coming to ANU, she held a Personal Chair in International Politics at the University of Wales, Aberystwyth, and was Professor of International Politics, Director of Research, and Associate Director (Politics and Ethics) of the Australian Centre for Cyber Security at UNSW Canberra (at the Australian Defence Force Academy).

Dr. Taylor Reynolds

Taylor Reynolds is the technology policy director of MIT’s Internet Policy Research Initiative. In this role, he leads the development of this interdisciplinary field of research to help policymakers address cybersecurity and Internet public policy challenges. He is responsible for building the community of researchers and students from departments and research labs across MIT, executing the strategic plan, and overseeing the day-to-day operations of the Initiative. Taylor’s current research focuses on three areas: leveraging cryptographic tools for measuring cyber risk, encryption policy, and international AI policy. Taylor was previously a senior economist at the OECD and led the organization’s Information Economy Unit covering policy issues such as the role of information and communication technologies in the economy, digital content, the economic impacts of the Internet and green ICTs. His previous work at the OECD concentrated on telecommunication and broadcast markets with a particular focus on broadband. Before joining the OECD, Taylor worked at the International Telecommunication Union, the World Bank and the National Telecommunications and Information Administration (United States). Taylor has an MBA from MIT and a Ph.D. in Economics from American University in Washington, DC.

On December 6, 2019, the “International Symposium: AI Governance and Security” was held at Daiwa House Ishibashi Nobuo Memorial Hall at the University of Tokyo. Artificial intelligence (AI) and related technology networks are now being used as decision support in critical situations such as medical care, finance, and war. What are the responsibilities and roles of humans and machines, and what should they be? As technology expands globally, discussions on AI governance have begun at the international level, for example at the OECD. In Japan, the Cabinet Office has announced the “Social Principles of Human-Centric AI,” but how can the Japanese government, industry and universities contribute to these international discussions?

For this event, we invited Professor Toni Erskine (Australian National University and Cambridge University), Director of the Coral Bell School of Asia Pacific Affairs at ANU, Chief Investigator on the Humanising Machine Intelligence research project, and Associate Fellow of the Leverhulme Centre for the Future of Intelligence, and Dr. Taylor Reynolds (MIT), Technology Policy Director at MIT’s Internet Policy Research Initiative and an expert member of the AIGO (AI Expert Group) at the Organization for Economic Co-operation and Development (OECD), to discuss the issues and prospects of AI governance and security with University of Tokyo professors.

First, Prof. Erskine presented “Responsibility Lost? AI, Restraint, and the Consequences of Outsourcing Decision-Making in War.” Today, the prospect of weapons that can make decisions about whom to target and kill without human intervention – so-called ‘lethal autonomous weapons’, or, more colloquially, ‘killer robots’ – has engendered a huge amount of attention amongst both policy-makers and scholars. However, rather than looking to the future, she raised concerns about existing automated weapons that retain “humans in the loop” and decision-support systems that currently assist targeting. She explored how these technologies influence our decision-making processes and actions, and how we view ourselves as responsible agents when we use them.

Prof. Erskine identified morally responsible agents in war as falling into three categories: (1) humans, (2) institutions and (3) intelligent artefacts. She raised particular concern about “misplaced responsibility” when the third category, “intelligent artefacts”, is used in war. She identified several risks: decision-making becoming a black box; restraint in war weakening if machines are understood to make decisions; and humans anthropomorphizing artificial intelligence, which results in “misplaced responsibility” when we mistakenly assume that machines can bear responsibility for human decisions and actions.

Prof. Erskine is especially concerned about people anthropomorphizing and even empathizing with AI and robots. She introduced one case of a military experiment in which a colonel, watching a mine-sweeping robot have its ‘limbs’ blown off, ordered the experiment to be halted, saying, “the test was inhumane.” Such examples of empathizing with military robots and caring for machines, she argued, suggest that we are likely to misjudge their capacities in other ways as well. This is a risk on the battlefield. From this perspective, Prof. Erskine concluded the presentation by emphasizing the importance of philosophical discussion of moral agency and responsibility with respect to the use of AI in war.

The second speaker, Dr. Reynolds, helps lead an interdisciplinary team of researchers from across MIT looking at the intersection of technology and policy, including AI. Today’s society faces a variety of challenges, including an aging population, trade conflicts, poverty, and income inequality. AI can be used to help solve these problems, for example by improving efficiency. Dr. Reynolds, who used to live in Paris, recalled often waiting two hours at a taxi stand; with Uber and other ride-sharing services, he said, waiting times have dropped dramatically. AI has also made remarkable contributions to healthcare.

On the other hand, AI has downsides. One is explainability: in some settings accuracy matters more than explainability, and in others the reverse. For example, a machine may be unable to explain its reasoning yet still tell with high accuracy whether a patient has cancer. In such cases many people would choose accuracy over explainability, whereas university entrance decisions would likely benefit from more explainability, even at the expense of accuracy.

Another problem is bias. The data used to train an AI model will bias the results. For example, there are various wedding styles in the world. If training data is tagged by Westerners who expect a bride and groom to look a certain way, models trained on that data will learn only about Western-style weddings and miss those from other cultures that have different styles of dress. Other bias challenges include facial recognition systems whose training data can be biased, making them better at recognizing certain skin tones than others.

It is important to promote the implementation of AI while overcoming other challenges such as privacy and security. In particular, Dr. Reynolds pointed out that AI should be used to support not only developed countries but also developing countries.

After the presentations, Prof. Shiroyama and Prof. Kuniyoshi of the University of Tokyo came onto the stage, and the panel discussed how to allocate responsibilities in a complex system consisting of humans and machines, and how to develop human resources capable of dealing with the technical, legal and ethical issues raised by AI and robotics. At the University of Tokyo, the Next Generation Artificial Intelligence Research Center, where Prof. Kuniyoshi is the director, and the Institute for Future Initiatives, where Prof. Shiroyama is the vice-director, are researching social issues raised by AI and robotics. Since this is not an easy subject to study, it is important to discuss it with stakeholders from different disciplines and sectors. The panel discussion ended with the shared idea of collaboration among the Australian National University, MIT and the University of Tokyo to address these issues.

Moderated and written by Arisa Ema, Institute for Future Initiatives